| Category | Assignment | Subject | Computer Science |
|---|---|---|---|
| University | University of Westminster | Module Title | 7BUIS008W Data Mining & Machine Learning |

Students are expected to critically justify the use of effective and novel data mining and machine learning techniques for a specific problem domain, and to reflect on how different data mining and machine learning algorithms operate in terms of their underlying design assumptions and biases for that domain. Students are also expected to methodically analyse the output of these algorithms, drawing technically appropriate and sound conclusions from their application to the given problem.

This assignment contributes towards the following Learning Outcomes (LOs):

Refer to section 4 of the “How you study” guide for undergraduate students for a clarification of how you are assessed, penalties and late submissions, what constitutes plagiarism etc.

If you submit your coursework late but within 24 hours or one working day of the specified deadline, 10 marks will be deducted from the final mark, as a penalty for late submission, except for work which obtains a mark in the range 50 – 59%, in which case the mark will be capped at the pass mark (50%). If you submit your coursework more than 24 hours or more than one working day after the specified deadline you will be given a mark of zero for the work in question unless a claim of Mitigating Circumstances has been submitted and accepted as valid.

It is recognised that on occasion, illness or a personal crisis can mean that you fail to submit a piece of work on time. In such cases you must inform the Campus Office in writing on a mitigating circumstances form, giving the reason for your late or non-submission. You must provide relevant documentary evidence with the form. This information will be reported to the relevant Assessment Board that will decide whether the mark of zero shall stand. For more detailed information regarding University Assessment Regulations, please refer to the following University of Westminster, London

There are a lot of clustering algorithms to choose from. The standard sklearn clustering suite has thirteen different clustering classes alone. So, what clustering algorithms should you be using? As with every question in data science and machine learning, it depends on your data. A number of those thirteen classes in sklearn are specialised for certain tasks. Obviously, an algorithm specialising in text clustering is going to be the right choice for clustering text data. Thus, if you know enough about your data, you can narrow down on the clustering algorithm that best suits that kind of data or the sorts of important properties your data has. But what if you don’t know much about your data? If, for example, you are ‘just looking’ and doing some exploratory data analysis (EDA), it is not so easy to choose a specialised algorithm.

So, what algorithm is good for exploratory data analysis?

Some scope (domain) for Exploratory Data Analysis (EDA) with clustering

To start, let’s lay down some ground rules of what we need a good EDA clustering algorithm to do; then, we can set about seeing how the algorithms available stack up.

In this coursework, you will be testing (assessing) various clustering algorithms in various ways. In order to compare the clustering algorithms, you must use the same code implementation to test all of them. Thus, it is critical to use the code provided below to cluster the data and produce results. Since this is an experiment, you will be required to set it up first in two steps: (A) Getting your environment set up and (B) Preparing and Testing Clustering Algorithms.

Note: The provided dataset, data.npy, is already clean and requires no further preprocessing.

A. Getting the environment set up

If we are going to compare clustering algorithms, we’ll need a few things: first, some libraries to load and cluster the data, and second, some visualisation tools so we can look at the results of clustering.

import numpy as np

import matplotlib.pyplot as plt

import seaborn as sns

import sklearn.cluster as cluster

import time

%matplotlib inline

sns.set_context('poster')

sns.set_color_codes()

plot_kwds = {'alpha' : 0.7, 's' : 15, 'linewidths':3}

Next, we need some data; download data.npy from Blackboard.

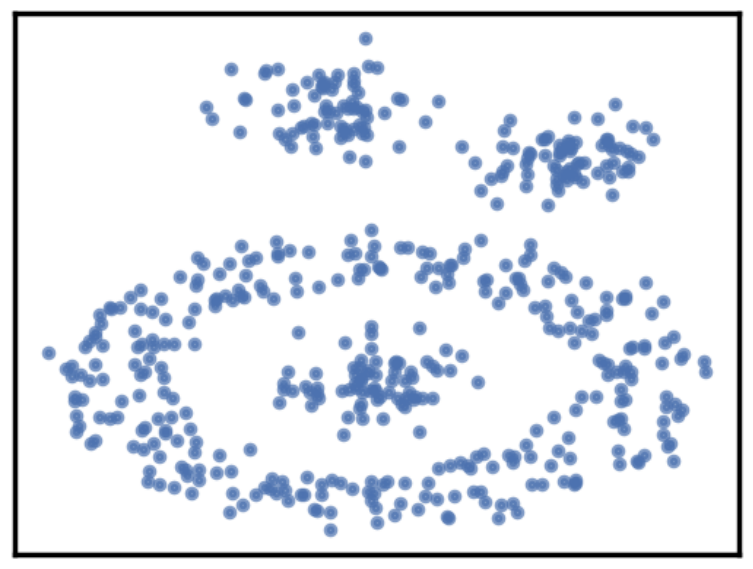

This is an artificial dataset that will give clustering algorithms a challenge: it contains some non-globular clusters, some noise, and so on, the sorts of things we expect to crop up in messy real-world data. So that we can actually visualise the clustering, the dataset is two-dimensional; this is not something we expect from real-world data, where you generally can’t just visualise the data and see what is going on. Running the following code visualises the dataset in 2D space (see Fig. 1).

data = np.load('/content/data.npy')
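Before plotting, it can help to confirm that the loaded array has the expected layout. The following is a minimal sketch, assuming the file holds a two-dimensional array with one row per point and two columns. Because data.npy itself is not reproduced here, the example writes a small stand-in array to a temporary file and loads it back; the name `stand_in` and the temporary path are illustrative assumptions, not part of the coursework files.

```python
import os
import tempfile

import numpy as np

# Stand-in for data.npy: 200 random 2-D points (an assumption for illustration only).
rng = np.random.default_rng(0)
stand_in = rng.normal(size=(200, 2))

# Save to a temporary .npy file and load it back, in the same way as
# np.load('/content/data.npy') loads the coursework dataset.
path = os.path.join(tempfile.mkdtemp(), 'data.npy')
np.save(path, stand_in)
loaded = np.load(path)

# Basic checks: one row per point, two columns, no missing values.
print('shape:', loaded.shape)
print('contains NaN:', bool(np.isnan(loaded).any()))
```

With the real file, the same two checks can be applied directly to `data`.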

So let’s have a look at the data and see what we have.

plt.scatter(data.T[0], data.T[1], c='b', **plot_kwds)

frame = plt.gca()

frame.axes.get_xaxis().set_visible(True)

frame.axes.get_yaxis().set_visible(True)


Fig.1. Visualisation of the coursework dataset ‘data.npy’

It’s messy, but there are certainly some clusters that you can pick out by eye; determining the exact boundaries of those clusters is harder of course, but we can hope that our clustering algorithms will find at least some of those clusters. So, on to testing with Sklearn Clustering Algorithms.

B. Preparing Clustering Algorithms for Testing

To start, let’s set up a little utility function to do the clustering and plot the results for us. When running the utility function call, the data will be clustered automatically for you.

We can also time the clustering algorithm while we’re at it and add that to the plot since we do care about performance.

# Cluster the data with the given algorithm, time it, and plot the result.
def plot_clusters(data, algorithm, args, kwds):
    # Time only the fit/predict step.
    start_time = time.time()
    labels = algorithm(*args, **kwds).fit_predict(data)
    end_time = time.time()
    # One colour per cluster label; points with a negative label (noise) are drawn in black.
    palette = sns.color_palette('Set3', np.unique(labels).max() + 1)
    colors = [palette[x] if x >= 0 else (0.0, 0.0, 0.0) for x in labels]
    plt.scatter(data.T[0], data.T[1], c=colors, **plot_kwds)
    frame = plt.gca()
    frame.axes.get_xaxis().set_visible(True)
    frame.axes.get_yaxis().set_visible(True)
    plt.title('Clusters found by {}'.format(str(algorithm.__name__)), fontsize=18)
    plt.text(0.1, -2.2, 'Clustering took {:.2f} s'.format(end_time - start_time), fontsize=14)
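When the labels and the timing are needed as values rather than as a plot (for example, to tabulate results across runs), the same clustering step can be wrapped without any plotting. This is a sketch only: the helper name `time_clustering` and the stand-in data are assumptions, and `time.perf_counter()` is swapped in for `time.time()` because it is intended for measuring short intervals.

```python
import time

import numpy as np
import sklearn.cluster as cluster


def time_clustering(data, algorithm, args, kwds):
    """Fit `algorithm` on `data` and return (labels, elapsed seconds)."""
    start = time.perf_counter()
    labels = algorithm(*args, **kwds).fit_predict(data)
    elapsed = time.perf_counter() - start
    return labels, elapsed


# Stand-in data: two well-separated blobs (illustrative, not the coursework dataset).
rng = np.random.default_rng(0)
demo = np.vstack([rng.normal(0.0, 0.1, (50, 2)), rng.normal(3.0, 0.1, (50, 2))])

labels, elapsed = time_clustering(demo, cluster.AgglomerativeClustering, (), {'n_clusters': 2})
print(len(labels), 'points clustered in', round(elapsed, 4), 's')
```

The signature mirrors plot_clusters, so the same (algorithm, args, kwds) triple can be passed to either function.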

Before we try doing the clustering, there are some things to keep in mind as we look at the results:

a) In real use cases, we can’t look at the data and realise points are not really in a cluster; we have to take the clustering algorithm at its word.

b) This is a small dataset, so poor performance here bodes very badly.

c) To plan your experiments with any algorithm, you must set up the utility function call by adapting plot_clusters(data, algorithm, args, kwds). That is, each call needs the data, the algorithm name, and the args and kwds you want to experiment with. Note that args and kwds carry the algorithm’s parameters.

d) At the preparation stage, the call shows only the names of the parameters and/or arguments you intend to use in your test. For example, to set up AgglomerativeClustering with the n_clusters parameter, you document:

plot_clusters(data, AgglomerativeClustering, (), {'n_clusters': value})

and so on for the rest of the algorithms and the parameters you intend to test.

e) To execute your test, load your data, insert values of your choice for the parameters and arguments in the function call established in the preparation, and run it. For example:

plot_clusters(data, cluster.AgglomerativeClustering, (), {'n_clusters': 6})
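Inside plot_clusters, args is unpacked as positional arguments and kwds as keyword arguments to the algorithm's constructor. The short sketch below illustrates that mechanism only; it builds the estimator both ways without fitting any data, and the variable names `by_keyword` and `by_position` are illustrative.

```python
import sklearn.cluster as cluster

# Keyword form, as in the example call: kwds carries the parameter by name.
by_keyword = cluster.AgglomerativeClustering(*(), **{'n_clusters': 6})

# Positional form: args carries the value, relying on n_clusters being
# the first constructor parameter of AgglomerativeClustering.
by_position = cluster.AgglomerativeClustering(*(6,), **{})

# Both estimators end up with the same setting.
print(by_keyword.get_params()['n_clusters'], by_position.get_params()['n_clusters'])
```

Passing parameters by name through kwds is usually the easier form to read and document, since the parameter names appear in the call itself.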

Task 1: Set up the Python environment for testing the sklearn clustering algorithms for the exploratory data analysis outlined in section 1,2. Answer each point in the same given order.

Task 2: Review published literature in the field to inform the (algorithm, args, kwds) values passed to the clustering function for the following clustering algorithms. You must justify your chosen parameters and their values, and include in-text citations for your justification. Answer each point in the same given order.

a. MiniBatchKMeans

b. MeanShift

c. HDBSCAN

d. AgglomerativeClustering

Substitute the kwds and args values into the utility function call syntax. You may find it useful to use the following table for each algorithm (using a table is optional but highly recommended).
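One possible way to keep such a per-algorithm record in code is a dictionary mapping each algorithm name to its (class, args, kwds) triple. The sketch below is an illustration under stated assumptions: the dictionary name `experiments`, the stand-in data, and every parameter value are placeholders, not recommended settings. The values you actually use must come from your own literature review. `sklearn.cluster.HDBSCAN` is only present in newer scikit-learn releases, so the sketch adds it only when it is available.

```python
import numpy as np
import sklearn.cluster as cluster

# Stand-in data: three tight blobs (illustrative only; not the coursework dataset).
rng = np.random.default_rng(0)
centres = [(0.0, 0.0), (3.0, 0.0), (0.0, 3.0)]
demo = np.vstack([rng.normal(c, 0.1, (60, 2)) for c in centres])

# One entry per algorithm: (class, args, kwds). All values are placeholders.
experiments = {
    'MiniBatchKMeans': (cluster.MiniBatchKMeans, (),
                        {'n_clusters': 3, 'n_init': 3, 'random_state': 0}),
    'MeanShift': (cluster.MeanShift, (), {'bandwidth': 1.0}),
    'AgglomerativeClustering': (cluster.AgglomerativeClustering, (),
                                {'n_clusters': 3, 'linkage': 'ward'}),
}
# Guard: HDBSCAN is missing from older scikit-learn versions.
if hasattr(cluster, 'HDBSCAN'):
    experiments['HDBSCAN'] = (cluster.HDBSCAN, (), {'min_cluster_size': 15})

for name, (algorithm, args, kwds) in experiments.items():
    labels = algorithm(*args, **kwds).fit_predict(demo)
    # Exclude the noise label (-1) when counting clusters.
    n_found = len(set(labels.tolist()) - {-1})
    print(name, kwds, '-> clusters found:', n_found)
```

Each triple can be passed unchanged to plot_clusters(data, algorithm, args, kwds), which keeps the documented parameters and the executed call in step.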

Task 3: Execute the following sklearn clustering algorithms as per your setup in Tasks 1 and 2, and plot the output for exploratory data analysis.

a. MiniBatchKMeans

b. MeanShift

c. HDBSCAN

d. AgglomerativeClustering
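Alongside the plots, the label array returned by fit_predict can be summarised numerically. The sketch below counts the points in each cluster and the points marked as noise, following the same convention as plot_clusters, where negative labels are treated as noise. The helper name `summarise_labels` and the example label array are hypothetical.

```python
import numpy as np


def summarise_labels(labels):
    """Return (cluster sizes keyed by label, number of noise points)."""
    labels = np.asarray(labels)
    values, counts = np.unique(labels, return_counts=True)
    # Keep only real clusters; negative labels are treated as noise.
    sizes = {int(v): int(c) for v, c in zip(values, counts) if v >= 0}
    n_noise = int(np.sum(labels < 0))
    return sizes, n_noise


# Hypothetical label array: two clusters plus three noise points.
example_labels = [0, 0, 1, -1, 1, 1, -1, 0, -1, 1]
sizes, n_noise = summarise_labels(example_labels)
print('cluster sizes:', sizes)
print('noise points:', n_noise)
```

A summary like this makes it easier to compare runs with different parameter values than by inspecting the plots alone.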

Task 4: Write a report that critically analyses and summarises the quality of the obtained clusters for each clustering algorithm based on the following aspects:

Present your findings for this task (Task 4) as a technical report. The paper must express your own conclusions and findings. The paper length should be between 950 and 1,500 words, excluding any references and plots. The minimum font size is 10.

Penalty Warning: Papers that fall below the lower word limit or exceed the upper word limit will be subject to a penalty of 10% (2 marks out of 20).
