| Category | Assignment | Subject | Computer Science |
|---|---|---|---|
| University | University of Bristol | Module Title | EMATM0051 Large Scale Data Engineering |

This coursework is divided into two parts:

Task 1: A written task (only) to design the architecture of a simple application on the AWS cloud, where you are required to have a deep understanding of AWS services and how they work together within an application. The design should demonstrate your knowledge of AWS services covered throughout the entire LSDE course.

Task 2: A combined practical and written activity architecting a scaling application on the cloud, where you will be required to use knowledge gained and a little further research to implement the scaling infrastructure, followed by a report that will focus on your experience in the practical activity together with knowledge gained in the entire LSDE course.

In Task 1, we require you to design the architecture for a website application, “MyTravel”, running on the AWS cloud. This is your own private website, which shares your travel blog. The application needs to meet the following requirements:

• The “MyTravel” website is public and should be accessible globally with low latency and high availability.

• You will regularly upload your travel pictures and text to this website.

• All data (pictures and text) should be automatically backed up in case of disaster.

• A congratulatory email will be sent to you when the total number of website visits exceeds 1,000,000.

You should include your own descriptions of the following, 500-800 words and no more than 2 A4 pages:

• List the AWS services used in your design and explain in detail how these services work to ensure high performance, security and cost-efficiency in this application.

• Use a diagram to demonstrate the architecture of this application, showing in particular how the AWS services interact and your network design. Please also describe how this application automatically backs up your data when you upload it.

You don’t need to implement these ideas in your lab account.

WordFreq is a complete, working application, built using the Go programming language.

[NOTE: you are NOT expected to understand or permitted to modify the source code in any way]

The basic functionality of the application is to count words in a text file. It returns the top ten most frequent words found in a text document and can process multiple text files sequentially.
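For orientation only, the counting behaviour described above can be sketched in a few lines of Python. This is not the WordFreq source code (which is written in Go and must not be modified); the function name `top_words` and the tokenisation rule (lower-cased runs of the letters a to z) are assumptions made for illustration.

```python
import re
from collections import Counter


def top_words(text: str, n: int = 10) -> list[tuple[str, int]]:
    """Return the n most frequent words in text with their counts.

    Assumption: a "word" is a run of ASCII letters, compared case-insensitively.
    The real application may tokenise differently.
    """
    words = re.findall(r"[a-z]+", text.lower())
    return Counter(words).most_common(n)


if __name__ == "__main__":
    sample = "The cat and the hat and the bat"
    print(top_words(sample, 2))
```

Words with equal counts are returned in the order they first appear, which is how `Counter.most_common` behaves in current Python versions.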

The application uses a number of AWS services:

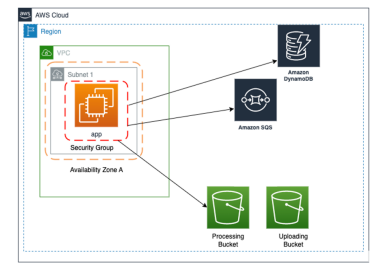

1. S3: There are two S3 buckets used for the application.

-> One is used for uploading and storing original text files from your local machine. This is your uploading bucket.

-> These files will be copied from the uploading bucket to the processing S3 bucket. The bucket has upload notifications enabled, such that when a file is uploaded, a message notification is automatically added to a wordfreq SQS queue.

2. SQS: There are two queues used for the application.

-> One is used for holding notification messages of newly uploaded text files from the S3 bucket. These messages are known as ‘jobs’, or tasks to be performed by the application, and specify the location of the text file on the S3 bucket.

-> A second queue is used to hold messages containing the ‘top 10’ results of the processed jobs.

3. DynamoDB: A NoSQL database table is created to store the results of the processed jobs.

4. EC2: The application runs on an Ubuntu Linux EC2 instance, which you will need to set up initially following the instructions given. This will include setting up and identifying the S3, SQS and DynamoDB resources to the application.
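To make the ‘job’ messages described under SQS above more concrete, here is a minimal Python sketch that extracts the file location from a message body. It assumes the body is a standard S3 event notification in JSON (a `Records` list with `s3.bucket.name` and a URL-encoded `s3.object.key`); the helper name `job_locations` is hypothetical, and how WordFreq itself reads these messages is not shown in this brief.

```python
import json
from urllib.parse import unquote_plus


def job_locations(message_body: str) -> list[tuple[str, str]]:
    """Return (bucket, key) pairs found in an S3 event notification body.

    Assumption: the SQS message body is the JSON document that S3 publishes
    for object-created events. Bodies without "Records" (for example the
    test event S3 sends when notifications are first enabled) yield [].
    """
    event = json.loads(message_body)
    locations = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Object keys are URL-encoded in notifications (spaces arrive as "+").
        key = unquote_plus(record["s3"]["object"]["key"])
        locations.append((bucket, key))
    return locations


if __name__ == "__main__":
    body = json.dumps({
        "Records": [
            {"s3": {"bucket": {"name": "example-processing-bucket"},
                    "object": {"key": "my+notes.txt"}}}
        ]
    })
    print(job_locations(body))
```

The bucket name in the usage example is a placeholder, not one of the coursework buckets.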

You will be required to initially set up and test the application, using instructions given with the zip download file. You will then need to implement auto-scaling for the application and improve its architecture based on principles learned in the CF course. Finally, you will write a report covering this process, along with some extra material.

Figure 1 - WordFreq standard architecture

Ensure you have accepted access to your AWS Academy Learner Lab account and have at least $10 credit (you are provided with $50 to start with). If you are running short of credit, please inform your instructor.

Refer to the WordFreq installation instructions (‘README.txt’) in the coursework zip download on the BlackBoard site, to install and configure the application in your Learner Lab account. These instructions do not cover every step – you are assumed to be confident in certain tasks, such as in the use of IAM permissions, launching and connecting via SSH to an EC2 instance, etc.

You will set up the database, storage buckets, queues and worker EC2 instance. Finally, ensure that you can upload a file and can see the results logged from the running worker service, before moving on to the next task.

You will need to give a brief summary of how the application works (without any reference to the code functionality) in this Task.

[NOTE: The application code is in the Go language. You are NOT expected to understand or modify it. Any code changes will be ignored and may lose marks.]

Review the architecture of the existing application. Each job process takes a random time to complete between 10-20 seconds (artificially induced, but DO NOT modify the application source code!). To be able to process multiple uploaded files, we need to add scaling to the application.

This should initially function as follows:

• When a given maximum performance metric threshold is exceeded, an identical worker instance is launched and begins to also process messages on the queues.

• When a given minimum performance metric threshold is crossed (the metric falls below it), the most recently launched worker instance is removed (terminated).

• There must always be at least one worker instance available to process messages when the application architecture is 'live'.

• You don’t want to add more instances every 2 minutes.
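The four rules above can be read as a small decision function. The Python sketch below is only an illustration of that logic, not an AWS API: the threshold values, the maximum of 8 instances and the 300-second cooldown are hypothetical placeholders, and the metric is left abstract because choosing it is part of the task. It also does not model which instance is removed on scale in; in practice that is governed by the Auto Scaling group's termination policy.

```python
from dataclasses import dataclass


@dataclass
class ScalingConfig:
    # All values below are illustrative assumptions, not recommended settings.
    scale_out_threshold: float = 10.0  # metric above this -> add one worker
    scale_in_threshold: float = 2.0    # metric below this -> remove one worker
    min_instances: int = 1             # always keep at least one worker
    max_instances: int = 8             # stay within the account's instance limit
    cooldown_seconds: int = 300        # longer than 2 minutes between scale-outs


def desired_capacity(current: int, metric: float,
                     seconds_since_last_scale_out: float,
                     cfg: ScalingConfig) -> int:
    """Return the worker count the rules above would ask for."""
    if metric > cfg.scale_out_threshold:
        cooled_down = seconds_since_last_scale_out >= cfg.cooldown_seconds
        if current < cfg.max_instances and cooled_down:
            return current + 1
        return current
    if metric < cfg.scale_in_threshold:
        # Never drop below the minimum number of workers.
        return max(cfg.min_instances, current - 1)
    return current


if __name__ == "__main__":
    cfg = ScalingConfig()
    print(desired_capacity(1, 50.0, 600, cfg))  # busy and cooled down
    print(desired_capacity(2, 50.0, 60, cfg))   # busy but still in cooldown
    print(desired_capacity(1, 0.0, 600, cfg))   # idle, already at minimum
```

Keeping the cooldown as an explicit parameter makes it easy to experiment with how quickly instances are added during load testing.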

Using the knowledge gained from the Cloud Foundations course, architect and implement auto-scaling functionality for the WordFreq application and demonstrate how you configured the auto-scaling policy. Note that this will not be exactly the same as Lab 6 in Module 10, which is for a web application. You will not need a load balancer, and you will need to identify a different CloudWatch performance metric to use for the ‘scale out’ and ‘scale in’ rules. The 'Average CPU Utilization' metric used in Lab 6 is not necessarily the best choice for this application.

Once you have set up your auto-scaling infrastructure, test that it works. The simplest method is to create around 120 text files. You could use the text files on Blackboard. Please make sure you’ve uploaded all 120 files to your uploading S3 bucket before starting this task.

You can ‘purge’ all files from your processing S3 bucket, then copy all the .txt files from your uploading S3 bucket to your processing S3 bucket. Please stop the original instance wordfreq-dev and only use the instances that are created by your Auto Scaling Group.

• Connect to one of the instances in your Auto Scaling Group (via SSH connection).

• Copy all the .txt files from your uploading S3 bucket (e.g., zj-wordfreq-nov24-uploading) to your processing S3 bucket (e.g., zj-wordfreq-nov24-processing) by running the following command in your SSH terminal:

aws s3 cp s3://<name of your uploading bucket> s3://<name of your processing bucket> --exclude "*" --include "*.txt" --recursive

Please watch and record the following behaviours and illustrate all load tests done for optimising auto-scaling:

• Watch the behaviour of your application to check that the scale out (add instances) and scale in (remove instances) functionality works.

• Take screenshots of your copied files in the S3 bucket, the SQS queue page showing message status, the Auto Scaling Group page showing instance status and the EC2 instance page showing launched / terminated instances during this process.

• Try to optimise the scaling operation, for example so that instances are launched quickly when required and terminated soon (but not immediately) when not required. Note down settings you used and the fastest file processing time you achieved.

• Try using a few different EC2 instance types – with more CPU power, memory, etc. Please record the processing time for each experiment and discuss your findings.
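As a rough baseline to compare your recorded timings against, the back-of-envelope arithmetic can be sketched in Python. It uses the 10 to 20 seconds per job stated earlier and assumes that each worker processes one job at a time and that jobs are spread evenly; instance launch time, queue polling delays and scaling lag are ignored, so real runs will normally take longer. The function name is hypothetical.

```python
import math


def estimated_processing_seconds(files: int, workers: int,
                                 min_job_seconds: float = 10.0,
                                 max_job_seconds: float = 20.0) -> tuple[float, float]:
    """Return (best case, worst case) seconds to process all files.

    Assumptions: one job at a time per worker, an even split of jobs,
    and no allowance for instance start-up or scaling delays.
    """
    if files < 0 or workers < 1:
        raise ValueError("files must be >= 0 and workers must be >= 1")
    jobs_per_worker = math.ceil(files / workers)
    return jobs_per_worker * min_job_seconds, jobs_per_worker * max_job_seconds


if __name__ == "__main__":
    for workers in (1, 2, 4, 8):
        low, high = estimated_processing_seconds(120, workers)
        print(f"{workers} worker(s): {low / 60:.1f} to {high / 60:.1f} minutes")
```

For the 120-file test with a single worker this gives 1200 to 2400 seconds, that is 20 to 40 minutes, which is why scaling matters for this workload.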

[NOTE: Please delete all the .txt files in your processing S3 bucket after load testing.]

[NOTE: Ensure that your WordFreq application’s auto-scaling is still functional when finished!]

[NOTE: Learner Lab accounts officially allow a maximum of 9 instances running in one region, including auto-scaling instances. Learner Lab accounts are also limited in which EC2 instance types and AWS services they can use. This is explained in the Lab Readme file on the Lab page, in the section ‘Service usage and other restrictions’. Please note that your account may be deactivated if you attempt to violate the service restrictions.]

Based on only AWS services and features learned from the Cloud Foundations course, describe how you could re-design the WordFreq application’s current cloud architecture (i.e. not changing the application’s functionality or code) to improve the architecture in the following areas:

• Increase resilience and availability of the application against component failure.

• Long-term backups of valuable data required.

• Cost-effective and efficient application for occasional use. Processing does not need to be immediate.

• Prevent unauthorised access.

Your description should ideally include diagrams and include the AWS services required together with a high-level explanation of features & configuration for each requirement.

You don’t need to implement these ideas in your lab account.

Based on services and frameworks covered in the full LSDE course, identify two alternative data processing services that would be far more performant and robust for this processing task. Please describe their advantages over the current version of WordFreq in a few paragraphs.

You don’t need to implement these ideas in your lab account.

Combine Task 1 and Task 2 into a single PDF. You will also need to give us your AWS Academy account credentials (username, password) at the end of your report.

The report should be a single PDF. It does not need to follow any specific format, but you should use grammar and spelling checkers on it and make good use of paragraphs and sub-headings. Double spacing is not required. Use diagrams where they make sense and include captions & references from the text.

[IMPORTANT: All text not originally created by you must be cited, leading to a final numbered reference section (based on e.g. the British Standard Numeric System) to avoid accusations of plagiarism.]

[IMPORTANT: Disable auto-scaling at the end of each lab session: Desired capacity = 0; Minimum capacity = 0. This saves credit and prevents multiple instances from launching and terminating when starting / stopping a lab session.]
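If you prefer to script this step rather than use the console, a minimal Python sketch using boto3 might look like the following. It assumes boto3 is installed and that valid Learner Lab credentials and a default region are configured; the group name `wordfreq-asg` is a hypothetical placeholder for your own Auto Scaling Group. The same two settings can equally be changed by hand on the Auto Scaling Group page.

```python
def disable_autoscaling_params(group_name: str) -> dict:
    """Build the request that sets minimum and desired capacity to 0."""
    return {
        "AutoScalingGroupName": group_name,
        "MinSize": 0,
        "DesiredCapacity": 0,
    }


def disable_autoscaling(group_name: str) -> None:
    """Apply the settings above to a real Auto Scaling Group."""
    # Imported here so the parameter helper can be used without boto3 installed.
    import boto3

    client = boto3.client("autoscaling")
    client.update_auto_scaling_group(**disable_autoscaling_params(group_name))


if __name__ == "__main__":
    # "wordfreq-asg" is a placeholder; only the parameters are printed here.
    print(disable_autoscaling_params("wordfreq-asg"))
```

Remember to restore the minimum and desired capacity to at least 1 at the start of your next session so that a worker is available again.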

You are given an AWS Academy Learner Lab account for this coursework. Each account has $50 assigned to it, which is updated every 24 hours and displayed on the Academy Lab page.

To access the lab from AWS Academy, select Courses > AWS Academy Learner Lab [99203] > Modules > AWS Academy Learner Lab > Launch AWS Academy Learner Lab. On this page click ‘Start Lab’ to start a new lab session, then the ‘AWS’ link to open the AWS Console once the button beside the link is green.

Please note:

• Ensure you shut down (stop or terminate) EC2 instances when you are not using them. These will use the most credit in your account in this exercise. Note that the Learner Lab will stop running instances when a session ends, then restart them when a new session begins.

• AWS Learner Lab accounts have only a limited subset of AWS services / features available to them, see the Readme file on the Lab page (Service usage and other restrictions).

• If you have installed the AWS CLI on your PC and wish to access your Learner Lab account, you will need the credentials (access key ID & secret access key) shown by pressing the AWS Details button on the Lab page. Note that these only remain valid for the current session.

• If you have any issues with AWS Academy or the Learner Lab, please book an Office Hours session or use the LSDE Discussion Forums to seek help FIRST; email the instructors only if there is no other option.
